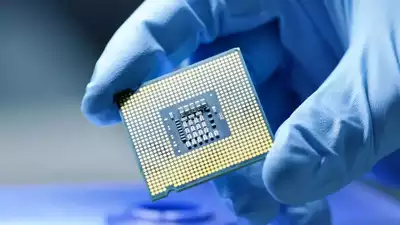

The phrase “your arbitrage is my opportunity” captures the essence of Meta’s bold strategy to develop in-house chips for AI training. This move is a significant part of Meta’s broader plan to minimize its reliance on third-party hardware and lower its overall infrastructure costs. Recent reports from Reuters reveal that Meta has begun testing a proprietary training chip, built in collaboration with Taiwan’s TSMC, the world-renowned semiconductor manufacturer. Meta already uses in-house chips for AI inference tasks, specifically in personalizing content for individual users. The company’s ultimate goal is to expand their use to the more complex and resource-intensive task of training AI models by 2026.

Meta’s decision to build its own chips is driven by a long-term vision to reduce its significant infrastructure expenditures. As the company intensifies its focus on AI tools to fuel growth, this strategy aims to optimize its operations and rein in costs. Meta has forecasted a total expenditure between $114 billion and $119 billion for 2025, with a substantial $65 billion allocated toward capital expenditures, largely dedicated to building up its AI infrastructure. This commitment underscores Meta’s belief in the transformative potential of AI and its ambition to lead in this space.

The new training chip being developed by Meta is designed as a dedicated accelerator for AI tasks. Unlike more general-purpose processors such as graphics processing units (GPUs), which serve a variety of computing tasks, these accelerators are built exclusively for AI workloads. This specialized design can make the chips more power-efficient, an important factor given the massive computational demands of AI training. By creating chips that are optimized for these tasks, Meta hopes to make its AI operations more efficient and cost-effective.

Even if some consumer-facing applications of generative AI, such as chatbots, ultimately fail to live up to the hype, Meta can still derive value from these chips in other areas. One of the most promising applications for AI at Meta is in improving its content recommendations and ad targeting. Meta’s advertising revenue, which makes up the vast majority of its income, could see significant boosts from incremental improvements in the targeting capabilities of its AI systems. Advertisers are always seeking better results, and even small advancements in precision could translate into billions of dollars in additional revenue for Meta.
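To make the scale argument concrete, here is a minimal arithmetic sketch. The revenue base and uplift percentages are purely hypothetical placeholders chosen for illustration; they are not Meta’s reported figures, and the function name `incremental_revenue` is invented for this example.

```python
def incremental_revenue(base_revenue_usd: float, relative_uplift: float) -> float:
    """Return the extra annual revenue from a relative uplift on a revenue base."""
    if base_revenue_usd < 0:
        raise ValueError("base_revenue_usd must be non-negative")
    return base_revenue_usd * relative_uplift


# HYPOTHETICAL annual ad revenue base (an assumption, not a reported number).
HYPOTHETICAL_AD_REVENUE_USD = 150e9

if __name__ == "__main__":
    # HYPOTHETICAL relative improvements in ad performance from better targeting.
    for uplift in (0.005, 0.01, 0.02):
        extra = incremental_revenue(HYPOTHETICAL_AD_REVENUE_USD, uplift)
        print(f"{uplift:.1%} uplift -> ${extra / 1e9:.2f}B extra per year")
```

The point of the sketch is only that the gain is linear in the revenue base: on a base measured in the hundreds of billions, even a low single-digit percentage improvement lands in the billions.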

Despite facing challenges in its hardware efforts, such as underwhelming results from its Reality Labs division, Meta has proven successful in building strong hardware teams. Over the years, the company has made considerable strides in hardware development, including successes like the Ray-Ban AI glasses. However, internal reports suggest that Meta’s hardware innovations have yet to achieve the world-changing impact that executives had envisioned. The company’s virtual reality (VR) headsets, for example, still sell in relatively small numbers, amounting to only a few million units annually. Nevertheless, Meta’s CEO, Mark Zuckerberg, has long been determined to reduce the company’s dependence on third-party platforms like Apple and Google by developing its own hardware.

For years, major tech giants have been spending billions to acquire GPUs from Nvidia, the dominant player in the AI chip market. Nvidia’s GPUs have become the industry standard for AI processing, thanks to their high performance and the availability of the CUDA software toolkit, which makes it easier to develop AI applications. Nvidia’s dominance is such that, in one recent quarter, nearly 50% of the company’s revenue came from just four customers. Nvidia’s growth has been impressive, but this concentration is also a vulnerability, because many of those same customers are now seeking to reduce their dependence on Nvidia by developing their own chips.

Meta’s decision to join this trend and build its own AI chips is not an isolated move. Amazon and Google have already ventured down similar paths with their own custom processors. Amazon has developed the Inferentia chips, designed for machine learning inference tasks, while Google has been working on its Tensor Processing Units (TPUs) for years. Both companies have made significant investments in their respective chip architectures as they strive to reduce their reliance on Nvidia and lower their AI infrastructure costs.

This shift toward in-house chip development reflects the broader trend in the tech industry of seeking to cut out the middleman and take greater control over critical components of the AI supply chain. By designing their own chips and contracting out fabrication, companies like Meta hope to lower costs, increase performance, and gain a competitive edge. However, these investments come with risks, as the companies must commit to long-term research and development efforts, often with little immediate return. Investors may be patient for only so long before they demand proof that these investments are paying off.

Nvidia’s position as the leader in the AI chip market has been increasingly challenged by the rise of competitors like AMD, which also offers GPUs for AI workloads. However, Nvidia’s combination of high-performance hardware and the CUDA toolkit has made it difficult for competitors to dethrone it. Nvidia’s CEO, Jensen Huang, has expressed confidence that the company’s growth will continue, noting that data center providers are expected to spend a trillion dollars on infrastructure over the next five years. This continued investment could provide a steady stream of demand for Nvidia’s chips well into the 2030s.

At the same time, there are concerns about Nvidia’s ability to sustain its growth in the long run. The concentration of its customer base among a few major companies, like Meta, Amazon, and Google, raises the possibility that these companies could eventually reduce their reliance on Nvidia’s chips by developing their own solutions. Moreover, the development of more efficient AI models, such as those from China’s DeepSeek, could also threaten Nvidia’s dominance, as these models may reduce the need for high-powered hardware.

Despite these challenges, Nvidia remains a formidable player in the AI chip market. The company’s ability to offer not just the hardware but also the software tools needed to develop AI applications on its chips has helped it maintain a strong position. The CUDA toolkit, in particular, has become a key enabler for developers working with AI. As a result, Nvidia has built a loyal customer base that relies heavily on its products.

For companies like Meta, however, the goal is to reduce their dependence on Nvidia and other third-party chip providers. Developing in-house chips is seen as a way to gain greater control over AI infrastructure and reduce costs in the long run. Meta’s push into chip development is just one example of how tech giants are looking to build more self-sufficient AI ecosystems. By taking ownership of their chip designs, these companies aim to unlock new efficiencies and, ultimately, drive greater profitability.

In addition to reducing costs, in-house chip development offers companies like Meta the potential to fine-tune their hardware to meet their specific needs. Custom-designed chips can be optimized for the unique demands of AI training and inference, allowing companies to maximize performance and efficiency. This level of customization is difficult to achieve with off-the-shelf chips, which are often designed for more general-purpose use.

Despite the potential benefits, developing in-house chips is not without its challenges. The process requires significant upfront investment in research and development, as well as securing manufacturing capacity from a foundry partner such as TSMC. Companies must also navigate the complexities of chip design and ensure that their products are competitive in terms of performance and cost. These hurdles mean that only the largest tech companies, like Meta, Amazon, and Google, have the resources to pursue such ambitious chip development projects.

Meta’s focus on building its own chips reflects a larger trend in the tech industry toward vertical integration. As the demand for AI processing power continues to grow, companies are increasingly looking to control every aspect of the AI supply chain, from hardware to software. This strategy allows them to optimize their operations and gain a competitive advantage. However, it also raises questions about the future of companies like Nvidia, which have long dominated the AI chip market.

In the coming years, the AI chip market is likely to become even more competitive, with more companies investing in custom hardware solutions. Meta’s push into chip development is just one example of how the landscape is shifting. As AI continues to play a central role in the growth strategies of major tech companies, the race to develop the most powerful and efficient chips will only intensify. For now, Meta’s bet on in-house chips represents a bold and potentially lucrative move that could shape the future of AI infrastructure.
